Portable Memory & Behavioral Signatures: the missing layer for AI personalisation

Everyone is talking about context engineering and clever memory‑management tricks inside models. It's great, and it's pushing the boundaries of what models can do. But how does this translate into tangible benefits for users? Once you realise that those improvements are ultimately about preserving and applying user context, you start to see that the real unlock for users isn’t more sophisticated retrieval inside the model; it’s allowing that context to travel with the user across different apps. True personalisation.

You’ve likely felt this yourself. You sign up for a new tool and get dragged through the usual five-page onboarding: your name, role, preferences, how you like to work. You fill it out again and again, across different apps. Each tool forces you to reconstruct a persona you’ve already expressed thousands of times elsewhere. There has to be a better way.

The interface that hasn't grown in 20 years

At the beginning of the year, I spent a lot of time thinking about what the next wave of authentication might look like in the age of AI agents. I initially thought it might require a reinvention of login flows, only to realise that the future probably looks like a more granular, more expressive version of OAuth. As I came to that conclusion, I found myself facing the old paradigm of login screens and onboarding flows, staring at the same boxes we’ve used for two decades and wondering why none of them have really changed since AI came about.

Login screens today do one thing only: they verify that you are who you say you are. Email, password, maybe a profile photo if the service feels generous, but nothing else: none of the behavioral patterns, preferences, context, or insights that an AI may have already learned about you elsewhere.

This made sense in Web 1.0/2.0, because the services holding your identity didn’t actually hold much else. Google or Apple could verify your email, but they didn’t understand how you think or work. This has quietly changed over the last two years. AI assistants like ChatGPT and Gemini are building extremely rich models of their users. You can call those behavioral signatures.

Behavioral Signatures

By behavioral signatures, I mean a compressed model of your habits/patterns (how you write, decide, schedule, what you read, search, and prioritise) rather than a raw log of everything you have ever done.

Here's how I'd place it in the whole Context/Memory definition blur:

- Context = the immediate working set

Context refers to the immediate working set the model is currently conditioned on. It’s whatever is inside the prompt window at the moment of generation: the last few messages, any retrieved documents, system instructions, and recent tool outputs. This state is short-lived and task-specific; once the window moves on, the model no longer has access to it. In implementation terms, context is just the prompt content and other ephemeral inputs passed into the forward pass.

- Memory = the persistent raw record

Memory refers to persistent external storage the system can look up when needed. This includes logs, past chats, notes, files, database entries, or embeddings stored in a vector database. Unlike context, this information is not transient: it remains available across sessions, but it is usually raw or lightly structured rather than already interpreted or compressed. Technically, memory lives outside the model weights and is only used when the system explicitly retrieves it.

- Behavioral signatures = the compressed model of you

Behavioral signatures are the compressed, structured model of the user, derived from repeated exposure to context and memory over time. Think of them as learned features about you (prefers bullet points, often asks for edge cases, tends to work late evenings). They’re not more raw data; they’re the essence distilled from that data, which the system can reuse across tasks and apps.
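To make the three layers concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the names `MemoryRecord`, `BehavioralSignature`, `distill` and `build_context` are hypothetical, each memory record stands for one past session, and "distilling" is reduced to keeping the traits that recur often enough. Real systems would infer traits in far richer ways.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class MemoryRecord:
    """Memory: one raw, persistent record (here, what was observed in one past session)."""
    session_id: str
    observed_traits: list[str]


@dataclass
class BehavioralSignature:
    """Compressed model of the user: trait -> share of sessions in which it showed up."""
    traits: dict[str, float] = field(default_factory=dict)


def distill(memory: list[MemoryRecord], min_share: float = 0.6) -> BehavioralSignature:
    """Keep only the traits that recur in at least `min_share` of the records."""
    if not memory:
        return BehavioralSignature()
    counts = Counter()
    for record in memory:
        counts.update(set(record.observed_traits))  # count each trait once per record
    total = len(memory)
    kept = {t: c / total for t, c in counts.items() if c / total >= min_share}
    return BehavioralSignature(traits=kept)


def build_context(signature: BehavioralSignature, recent_messages: list[str]) -> str:
    """Context: the short-lived prompt content assembled for a single generation."""
    hints = ", ".join(sorted(signature.traits)) or "none"
    return f"User traits: {hints}\n" + "\n".join(recent_messages)


# Hypothetical raw memory: four past sessions with the traits observed in each.
memory = [
    MemoryRecord("s1", ["prefers_bullet_points", "asks_for_edge_cases"]),
    MemoryRecord("s2", ["prefers_bullet_points"]),
    MemoryRecord("s3", ["prefers_bullet_points", "works_late_evenings"]),
    MemoryRecord("s4", ["asks_for_edge_cases", "works_late_evenings"]),
]

signature = distill(memory)
print(signature.traits)  # {'prefers_bullet_points': 0.75}
print(build_context(signature, ["Summarise this doc."]))
```

The signature is much smaller than the memory it was derived from, and it is the only piece that would need to travel to another app; the raw records can stay where they are.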

With behavioral signatures, for the first time, a login provider doesn’t just know who you are, but how you operate. This is why the login box is going to evolve from connecting your identity to connecting your brain/memory.

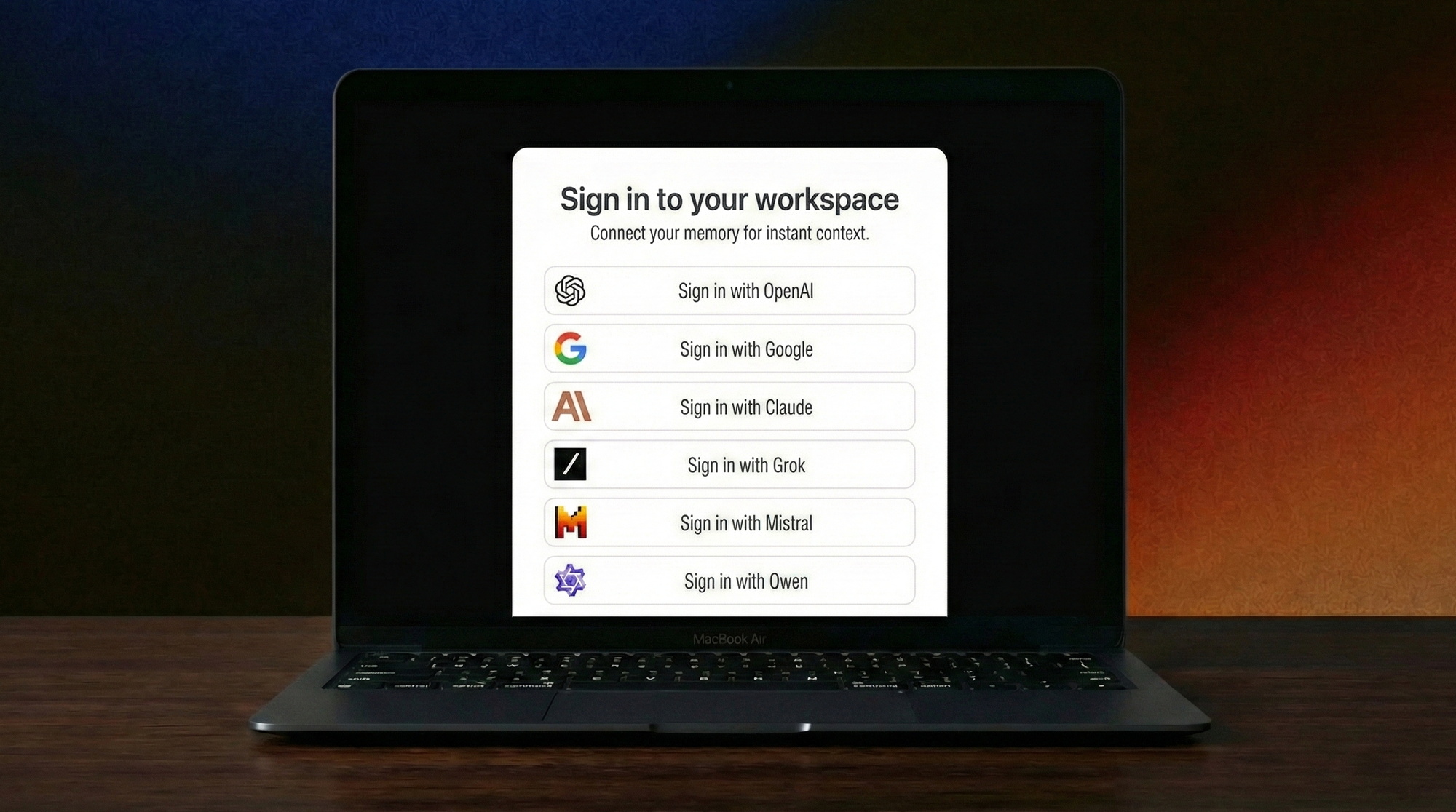

"Sign in with Google/Apple/OpenAI" will become an implicit way to "Sign in with your behavioral signatures." Logging into a new tool will no longer feel like introducing yourself from scratch but more like picking a memory provider that brings your context with you. Your preferences, tone, goals, personality, all imported with a simple click.

How portable memory unlocks personalised software

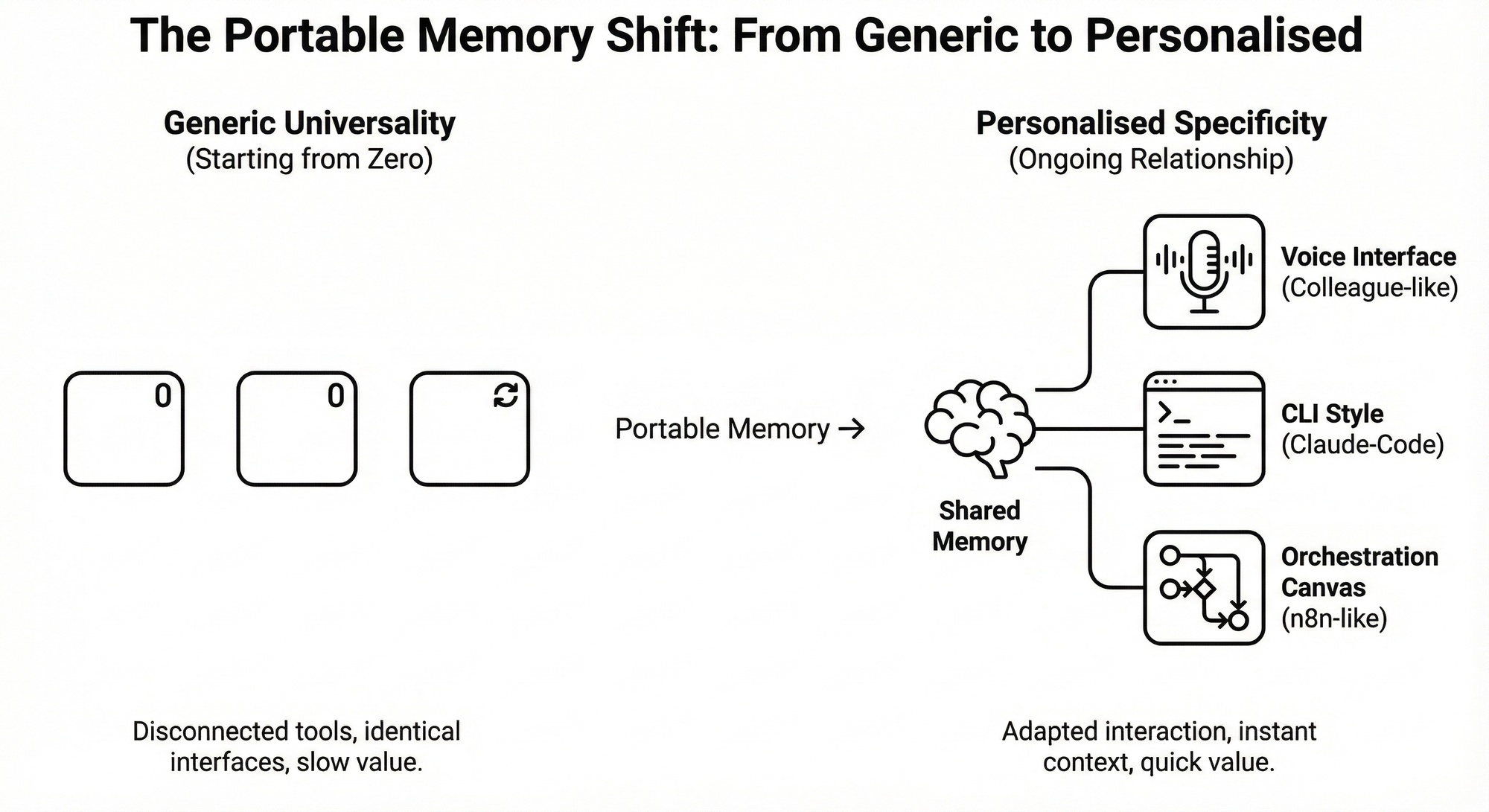

Portable memory doesn’t just speed up onboarding; it changes what software can be (insert Hans Zimmer music). Once an app knows your preferences and goals from the moment you log in, it can adapt its interface, workflows and recommendation engines to feel like it was built specifically for you. Three users opening the same product could effectively be opening three different interfaces: one tuned for a fast, minimalist operator, another for a reflective planner, and a third for someone who delegates heavily to agents.

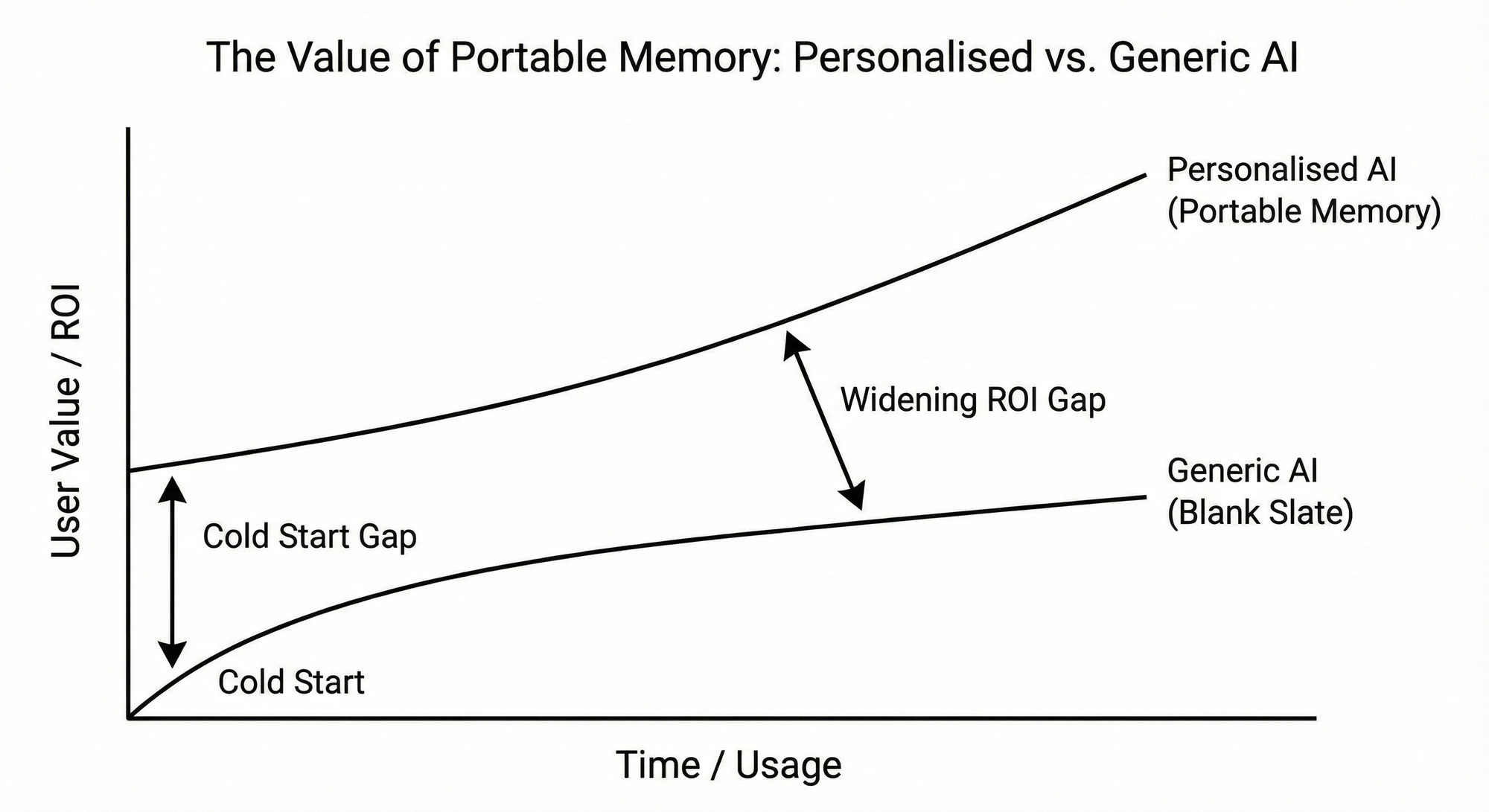

Portable memory is the missing foundation that lets software move from generic universality to personalised specificity. It turns every app into a continuation of your ongoing relationship with your AI, rather than a disconnected tool starting from zero. Crucially, this goes beyond "a personalised UI". Personalised AI changes both what gets surfaced and how quickly it provides value. It leans into the interaction style you’re most comfortable with: for some, that’s a voice interface that feels like talking to a colleague; for others, it’s a Claude‑Code‑style CLI, or an n8n‑like orchestration canvas.

The same behavioral signatures that guide what is suggested also determine how it shows up: as a conversation, a short command, or a structured flow. Over time, the gap in ROI between products that start from your behavioral signatures and those that start from a blank slate will only widen. The cold start of onboarding will disappear, and this will be the early innings of truly personalised software.
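As a rough illustration of how an app might act on this at first login, the sketch below picks an interaction mode from a signature. It is a toy under stated assumptions: the trait names, the `MODE_HINTS` mapping and the `choose_interface` function are all hypothetical, and a real product would weigh far more signals than a simple sum of trait weights.

```python
# Hypothetical mapping from interaction modes to the traits that suggest them.
MODE_HINTS = {
    "voice": {"prefers_talking_things_through"},
    "cli": {"prefers_short_commands", "fast_minimalist_operator"},
    "canvas": {"reflective_planner", "delegates_to_agents"},
}


def choose_interface(traits: dict[str, float], default: str = "onboarding") -> str:
    """Pick the mode whose hinted traits carry the most weight in the signature.

    Falls back to `default` (the classic blank-slate onboarding) when the
    signature says nothing about any mode.
    """
    scores = {
        mode: sum(traits.get(trait, 0.0) for trait in hints)
        for mode, hints in MODE_HINTS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default


print(choose_interface({"fast_minimalist_operator": 0.9, "reflective_planner": 0.3}))  # cli
print(choose_interface({}))  # onboarding
```

The fallback branch is the cold start described above: with no signature, the app has nothing to go on and has to ask.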

From "Sign in with Google" to "Sign in with my brain"

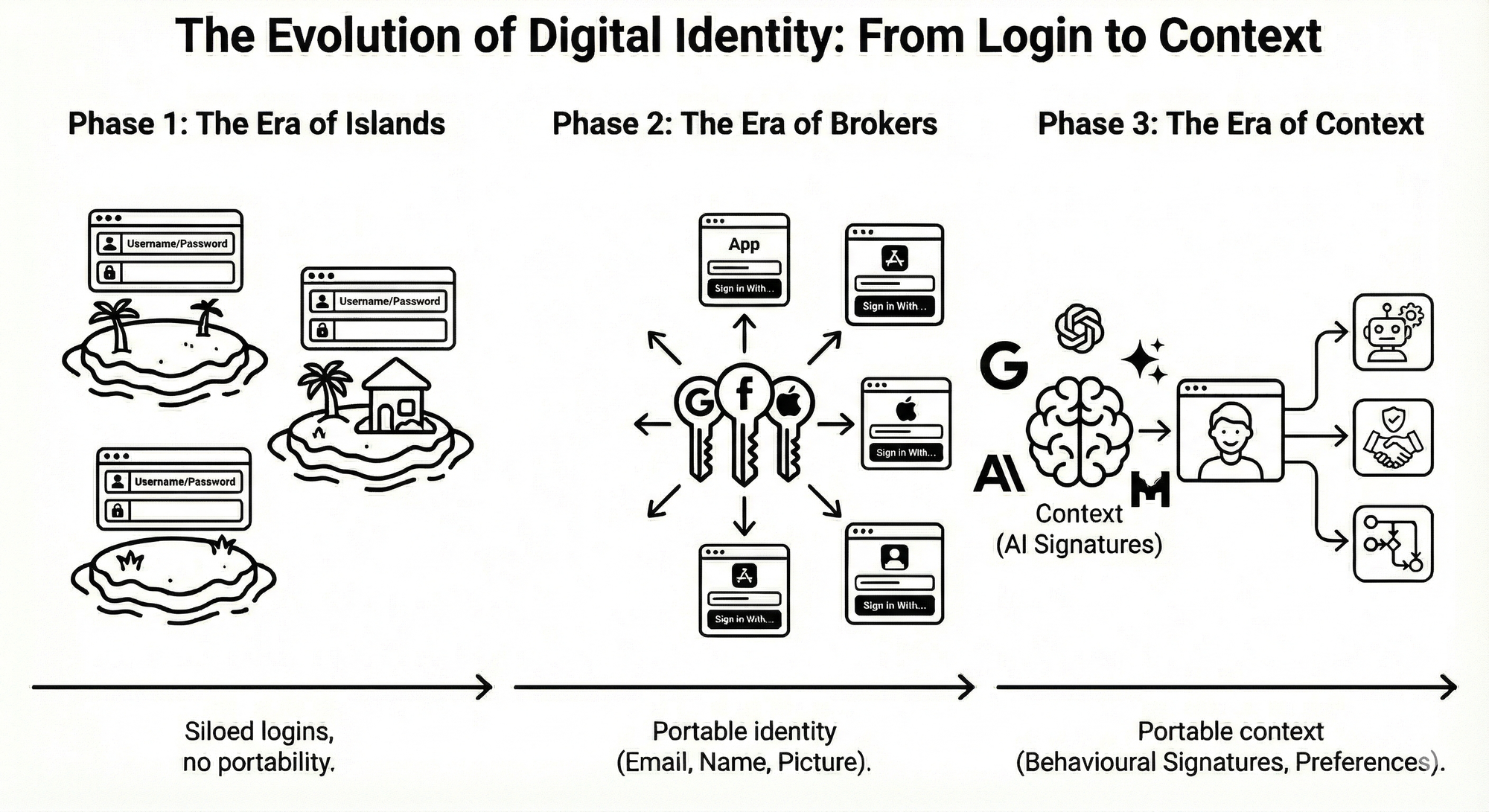

We’ve already lived through distinct eras of digital identity, but we rarely talk about how each shift quietly expanded what a login meant.

- In Web 1.0, every service was an island. You created a new username, a new password, a new profile. Nothing flowed between platforms because nothing could.

- Web 2.0 changed that. “Sign in with Google,” “Sign in with Facebook,” and later “Sign in with Apple” didn’t just simplify onboarding. They turned these social giants into global authentication brokers. Your identity became portable for the first time, even if the portability stopped at an email, a name, and occasionally a profile picture.

- Now we’re entering a third era for online identity. The shift is no longer just about outsourcing your login; it’s about outsourcing your accumulated context. Identity becomes fused with the behavioral signatures AI systems hold about you, and that context becomes something you can carry into other apps and services.

This shift massively increases the importance of consumer/prosumer AI winners. Whoever owns the most surface area of your digital life (personal and professional) will provide the richest memory graph. In this digital world, your memory graph, the organised set of behavioral signatures an AI has inferred about you, becomes the most valuable piece of information a product can access.

Two serious contenders: OpenAI and Google

Each of the big AI players now sees a slightly different version of you, depending on the persona you project across its ecosystem, even if the lines are starting to blur now that they all ship both consumer and enterprise offerings. If portable memory becomes real, it won’t be monolithic. The future will almost certainly have multiple competing players, but two already seem ahead:

- OpenAI’s strongest signals come from how you think and communicate: the drafts you work on together, your questions, your preferences, your reasoning patterns.

- Google’s strongest signals come from how you organise and operate: your email, calendar, documents, searches.

None of these boundaries are perfectly clean: OpenAI is pushing into productivity; Google has conversational agents; Microsoft’s Copilot blends both across Office and Windows. You can think of these systems as holding different projections of the same person: how you talk and reason, how you schedule and work, how you search and consume.

Portable memory is what lets a third‑party app tap into those different projections, with your permission. A new tool would almost certainly rely on whichever single memory provider you choose to authenticate with, and would welcome you with a personalised setup and UI.
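"With your permission" is where a more granular, OAuth-like consent model would come in. The sketch below shows one way a memory provider could release only the parts of a signature the user approved for a given app. It is purely illustrative: the `signature:<category>` scope strings, the `Grant` type and `release_signature` are invented here and do not correspond to any existing provider's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """What the user approved for one app (hypothetical, OAuth-style scopes)."""
    app_id: str
    scopes: frozenset[str]


def release_signature(
    signature: dict[str, dict[str, float]], grant: Grant
) -> dict[str, dict[str, float]]:
    """Return only the signature categories covered by the grant's scopes."""
    return {
        category: dict(traits)  # copy so the app cannot mutate the provider's data
        for category, traits in signature.items()
        if f"signature:{category}" in grant.scopes
    }


# Hypothetical signature held by the memory provider, grouped by category.
full_signature = {
    "tone": {"concise": 0.9},
    "schedule": {"works_late_evenings": 0.7},
    "goals": {"ship_weekly": 0.6},
}

grant = Grant(app_id="notes-app", scopes=frozenset({"signature:tone", "signature:goals"}))
shared = release_signature(full_signature, grant)
print(shared)  # {'tone': {'concise': 0.9}, 'goals': {'ship_weekly': 0.6}}
```

Here the notes app learns how you like to be addressed and what you are working towards, but nothing about your schedule, because you never granted that scope.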

The mounting pressure to make memory interoperable

"Why would OpenAI or Google allow third-party tools to tap into their memory graphs? Isn’t this their moat?"

I've got three reasons to believe this may change soon: