Attention Was All We Needed - Now It’s All We Lack

Intelligence Too Cheap to Meter

In 1954, electricity was hailed as "too cheap to meter" thanks to nuclear power. What a weird twist of events that, 70 years later, nuclear is now gearing up to power the very infrastructure that is creating intelligence "too cheap to meter".

Back in 2017, the paper Attention Is All You Need introduced the Transformer architecture, the engine behind today's Large Language Models. Since then, the cost of deploying intelligence has plummeted, turning complex reasoning and coding from rare flashes of genius into near-zero marginal-cost commodities.

In many ways, intelligence is no longer special. Once it’s trivial to spin up advanced reasoning, how do we differentiate?

It’s reminiscent of a line from The Incredibles, where Dash and Syndrome, at different points in the movie, make the same point: when everyone’s special, no one is. Once extraordinary ability becomes commonplace, it stops being a competitive edge. This is happening across the product development lifecycle as we speak, and the question is where it ends.

Advances in model architecture and compute have made high-level cognitive tasks widely accessible, and LLMs are now built into everyday tools and workflows across many domains. One of the most successful applications of this technology has been in coding. Cursor, Windsurf, Lovable, Bolt, Replit, v0, GitHub Copilot and many others rely on cutting-edge generative models optimised for code generation. If you haven't tried some of those tools you're doing yourself a disservice, even if you're non-technical (Bolt/Lovable/Replit will get you started with zero coding experience).

Design and Taste Too Cheap to Meter

Historically, crafting a well-designed product required specialized UX research, brand strategy, and a refined aesthetic sense. Today, AI is on the verge of tackling both the aesthetic and functional sides of design. Tools like Midjourney can generate polished visuals, while specialized coding models produce production-grade front-end scaffolding in a few prompts.

What once demanded separate skill sets (graphic design and software engineering) can now be assembled by prompting an AI. While early code generation looked clunky, these systems increasingly rely on standardized libraries (shadcn/ui, Tailwind CSS, or other popular React component kits) for slick, out-of-the-box UIs.

This is the only color palette you need to go from $0 to $1B ARR pic.twitter.com/q3HtQh0AW7

— Guillermo Rauch (@rauchg) February 24, 2025

On the downside, few builders know how to deeply customize these auto-generated interfaces, leading to a sea of near-identical designs. Is it a question of taste, or is taste itself just another function to be optimized?

one of the reasons why im skeptical of the impact AI will have on culture is that our judgments of taste are highly social. both of these aesthetics strike me as bad, and it has nothing to do with their inherent qualities, but rather what the aesthetics represent. https://t.co/qoXwwdG3ph

— derek guy (@dieworkwear) December 25, 2022

Yet, does taste even matter in most cases? If taste is a mix of cultural and social norms, I'm just not sure it matters when you're talking about building the majority of software. Take Craig, who logs auto parts in his inventory day in, day out. He couldn’t care less about the brand colors; he just wants to search for ‘brake pads’ without the UI glitching out.

so they definitely trained 3.7 on landing pages right?

— Sully (@SullyOmarr) February 24, 2025

probably the best UI I've seen an LLM generate from a single prompt (no images)

bonkers pic.twitter.com/B1sdCGsqHr

But while Craig doesn’t care, his experience is also shaped by a larger, unseen force: an increasingly homogeneous design language dictated by AI. It's only a matter of time before a model fine-tuned on top-voted or award-winning sites figures out what truly resonates. Imagine feeding a model a Pinterest board of your favorite layouts and color palettes. Style transfer and personalization algorithms could spin up something professional and cohesive without you ever mastering design or code.

As design follows intelligence into the realm of commoditization, a sleek, on-brand product becomes table stakes. In a market saturated with visually flawless offerings, having great design is no longer enough.

Agency in a Multi-Agent World

If intelligence and design are both trivial, one might assume that human agency (vision, team-building, orchestrating projects) becomes the new differentiator.

If execution itself becomes too cheap to meter, does human agency still hold an edge? Historically, strategy and orchestration were the final frontiers. Knowing "what to build" and "how to coordinate it" separated great founders from good ones. In a world of multi-agent systems, even decision-making and execution can be offloaded. When agents can spin up entire workflows, browse the web, and interact autonomously, what does agency mean anymore? Does it shift from "who can build" to "who can inspire, mobilize, and create conviction around a vision"?

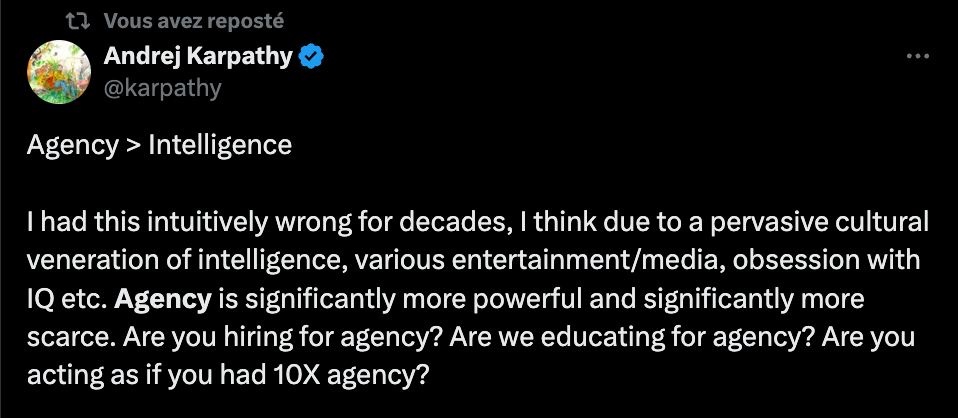

As Andrej Karpathy's tweet goes on to explain (thanks to Grok): "Agency, as a personality trait, refers to an individual's capacity to take initiative, make decisions, and exert control over their actions and environment."

Sounds pretty human, right? Yet we now face the prospect of multi-agent systems that handle everything from scheduling to interacting to decision-making.

| Agency Trait | Multi-Agent System | Core Technology |
|---|---|---|
| Taking Initiative | Agents operate autonomously, starting tasks without waiting for direct human commands. | Event-driven architectures; reinforcement learning policies; heuristic-based planners |
| Decision-Making | Agents evaluate multiple actions and choose the best path using algorithms or learned behaviors. | Planning algorithms; utility/reward functions; knowledge graphs and reasoning engines |
| Exerting Control Over Environment | Agents enact changes to their environment (digital, but arguably soon physical too) to fulfill goals. | APIs and tool access; orchestration frameworks |
| Adapting to Context | Agents monitor the environment and adjust behaviors in response to real-time changes (feedback loops). | Online/continual learning |

Foundation models that can use external tools further push this boundary, enabling AI orchestrators to interface with the world, not just passively but actively.
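
To make the table above a bit more concrete, here is a toy sketch of how the four traits could fit into one loop. Everything in it is an assumption made for illustration: the `Agent` class, the tool names, and the reward values are hypothetical, and a real system would put a foundation model and real APIs where the lambdas are.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Agent:
    # Hypothetical tool registry: name -> callable that acts on the
    # environment and returns a reward signal (higher is better).
    tools: Dict[str, Callable[[str], float]]
    # Running estimate of how useful each tool has been so far.
    utility: Dict[str, float] = field(default_factory=dict)
    learning_rate: float = 0.5

    def on_event(self, event: str) -> str:
        """Initiative: react to an event, no human command required."""
        # Decision-making: pick the tool with the highest estimated utility
        # (ties go to the tool registered first).
        choice = max(self.tools, key=lambda name: self.utility.get(name, 0.0))
        # Control over the environment: actually call the tool.
        reward = self.tools[choice](event)
        # Adapting to context: nudge the estimate toward the observed reward.
        old = self.utility.get(choice, 0.0)
        self.utility[choice] = old + self.learning_rate * (reward - old)
        return choice


# Made-up tools for Craig's inventory: one unhelpful, one helpful.
agent = Agent(tools={
    "search_web": lambda event: -1.0,
    "reorder_stock": lambda event: 1.0,
})
first = agent.on_event("low stock: brake pads")
second = agent.on_event("low stock: brake pads")
```

On the first event the agent has no preference and tries `search_web`; the poor reward lowers that tool's estimate, so the second event goes to `reorder_stock`. It's a cartoon of a feedback loop, but it is the same shape as the rows in the table.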

Unless you've been living under a rock, you've likely noticed the rise of 'Deep Research' derivatives from platforms like Grok, Perplexity, and OpenAI, which are already helping white-collar workers streamline report creation. This trend is just the beginning. These tools are increasingly running behind the scenes to orchestrate complex workflows, information aggregation, advanced coding, and full-stack automation.

So what does the product layer even look like in a world dominated by AI agents? We’re still unsure. Some speculate an “absence of product,” where tasks happen entirely in the background, much like a cron job for everything.

In some ways, this is already the reality we're in with APIs; it just wasn't as accessible to the average non-technical software user. Once multi-agent systems can run complex workflows, navigate websites, and connect to a growing suite of external tools, entire operations could be automated.

At this point, who, or what, gets authorized to run these agents? Agent authentication is becoming a critical question: who gets permission to spin up complex workflows, act on behalf of a business, or execute autonomous decisions? If you're working on anything similar, I'd love to share notes or ideas.
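
One way to picture the question is as a scoped, expiring grant: someone delegates a narrow set of permissions to a specific agent for a limited time. The sketch below is only an illustration under that assumption. The `Grant` type, the scope strings, and the identifiers are all hypothetical and not taken from any existing standard or product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet


@dataclass(frozen=True)
class Grant:
    agent_id: str            # which agent holds the grant
    on_behalf_of: str        # the business or person delegating authority
    scopes: FrozenSet[str]   # what the agent is allowed to do
    expires_at: datetime     # grants should not live forever


def is_authorized(grant: Grant, agent_id: str, scope: str, now: datetime) -> bool:
    """True only if the right agent asks for a granted scope before expiry."""
    return (
        grant.agent_id == agent_id
        and scope in grant.scopes
        and now < grant.expires_at
    )


# Hypothetical example: a one-hour, read-only grant for an inventory agent.
now = datetime(2025, 3, 1, tzinfo=timezone.utc)
grant = Grant(
    agent_id="agent-7",
    on_behalf_of="craigs-auto-parts",
    scopes=frozenset({"inventory:read"}),
    expires_at=now + timedelta(hours=1),
)
can_read = is_authorized(grant, "agent-7", "inventory:read", now)
can_pay = is_authorized(grant, "agent-7", "payments:write", now)
```

Here `can_read` is true and `can_pay` is false. The hard parts (who issues the grant, how an agent proves its identity, how to revoke it) are exactly the open questions.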

Two years ago, the Toolformer paper proposed having an LLM interact with external tools. Today, that vision is already a reality. If execution becomes just as cheap to meter as intelligence or design, is strong human agency still enough to stand out?

Distribution: The Next Frontier

Even if you can assemble and execute flawlessly, the next question is how to get your product in front of the right people. Once functionality, aesthetics, and coordination all become trivial, distribution emerges as the final outward-facing frontier: making sure your offering resonates with its intended audience.

What will this trend look like, powered by AI? As I half-jokingly say: what will the world of software look like when every single employee at a venture-backed SaaS business can build, over a weekend, an 80/20 version of the platform they currently work for?

AI won't just contribute to flooding the market with products; it will also influence discovery. Perplexity, Grok, and future agentic systems aren’t just consuming information, they're curating, ranking, and deciding what deserves attention.

If AI can both generate content and dictate its reach, does distribution become a game of who can best train their models on human engagement patterns? Or does it shift back to pure relationships, where trust, not just algorithms, determines who wins?

Tools that automate content generation, targeting, and channel orchestration have also arrived, making distribution more accessible and, paradoxically, more competitive. The noise is deafening and it's not getting quieter any time soon. This is why I'm bearish on optimising for human engagement patterns. Trust will matter more than ever before.

In a world of abundance (or hyper-saturation...), genuine trust and community-building are becoming the real differentiators.

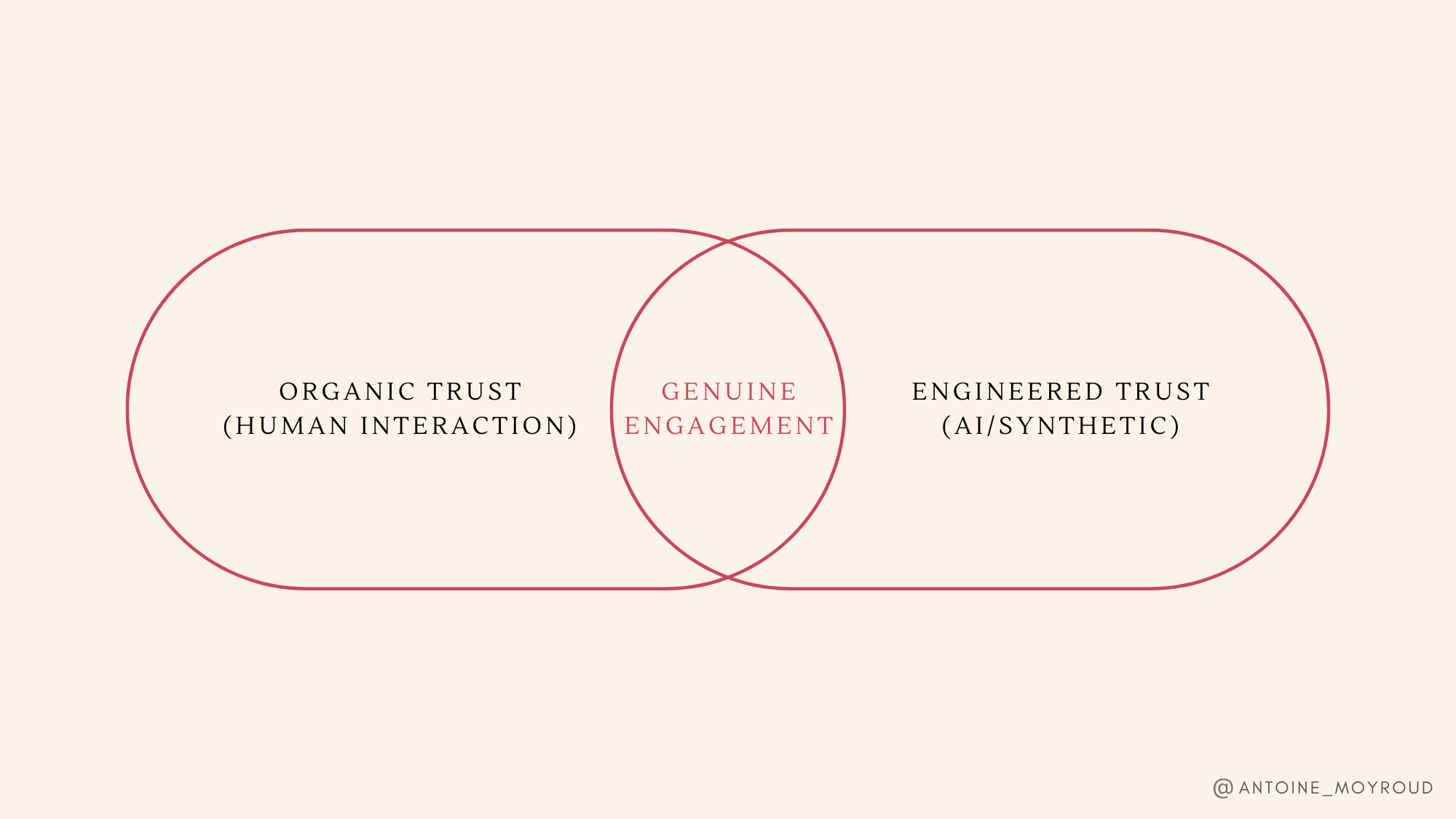

If software is abundant, trust becomes the new scarcity. Creator-led brands, invite-only communities, and high-signal micro-networks are emerging as antidotes to an oversaturated digital landscape. When every product looks the same and every sales email is AI-generated, the only real advantage is relationships. But what happens when even those relationships become synthetic?

We're already seeing platforms where millions spend hours interacting with AI-generated personas, some indistinguishable from real people, others explicitly fictional yet still forming deep, parasocial bonds. If audiences willingly engage with and even trust artificial entities over humans, does trust remain a function of authenticity, or does it simply become another optimization problem?

At what point does the line between organic influence and engineered engagement disappear? If people prefer synthetic relationships to real ones, what does that mean for the last human moat? For now at least, I'd rather take the optimistic view and bet on human relationships, though the truth is that we'll probably land somewhere in between.

We are seeing the rise of a new creator economy, where genuine community builders, from celebrities to micro-influencers, leverage their owned audiences, monetizing and distributing products directly to fans who already trust them. The folks at Slow Ventures recently announced a fund to address this specific trend.

It’s not “VC” it’s just us. https://t.co/ZDg4BZKtwa

— yoni rechtman (@yrechtman) February 15, 2025

Even seamless distribution doesn’t guarantee that people will genuinely care. With so many flawlessly marketed products competing for the same eyeballs, we face a deeper paradox, one that returns us to the title of that original paper: attention is all you need...

Intelligence Is Cheap, Attention Is Priceless

Attention Is All You Need originally described a neural net breakthrough. Attention in the human sense, though, has emerged as the last true scarcity. If attention is all you need, it's all we lack in a world of infinite products. We may witness an explosion of new software, products, content, and experiences, all “intelligent,” impeccably designed, seamlessly executed, and broadly distributed, each fighting for our finite focus.

No matter how advanced AI becomes, it cannot manufacture more hours for additional human engagement. It can optimize, manipulate, or fight for attention but it can’t create more of it.

Herbert Simon, whose work underpins the idea of the attention economy, put it this way: “A wealth of information creates a poverty of attention.” We have only 24 hours in a day. We’ve now reached a moment where intelligence, design, agency, and distribution are all increasingly commoditized. With the remaining “frontiers” also subject to the same forces, the question shifts from “Can we build something compelling?” to “How will anyone care?”

The Final Bottleneck

Ultimately, Attention Is All You Need may be more than just a seminal research paper. It also serves as a reminder that genuine human attention remains the final bottleneck, one scarce resource AI cannot replicate or conjure out of thin air.

Congrats! You've survived the article. Your attention is the only thing AI can't replicate, so use it wisely. Now, enjoy some sludge content. Or consider subscribing to get those sporadic blog posts sent straight to your inbox 🙃.